About TimVideos.us

TimVideos.us is a group of exciting projects which together create a system for doing both recording and live event streaming for conferences, meetings, user groups and other presentations. We hope that, through our projects, the costs and expertise currently required to produce live streaming events will be reduced to near zero.

HDMI2USB is one of the projects run by the organization. The HDMI2USB project develops affordable hardware options to record and stream HD videos (from HDMI & DisplayPort sources) for conferences, meetings and user groups.

Description copied from: https://hdmi2usb.tv/home/

Original Goals

My project is titled “Add hardware mixing support to HDMI2USB firmware”. It aimed to add crop/pad/scale support to HDMI2USB-litex-firmware and, later on, to develop a hardware mixer block.

Expected results

- The HDMI2USB LiteX firmware supports crop/pad/scale.

- The firmware command line has the ability to specify that an output is the combination of two inputs. These combinations should include dynamic changes like fading and wipes between two inputs.
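To make the two kinds of transitions concrete, here is a minimal Python sketch of a fade and a wipe between two inputs. It assumes frames are lists of rows of 8-bit grey values and uses integer arithmetic with a fade level from 0 to 256, roughly the way a hardware mixer might avoid floating point. The function names are hypothetical and are not part of the HDMI2USB firmware.

```python
def fade(frame_a, frame_b, level):
    """Cross-fade from A (level 0) to B (level 256).

    Integer form of out = (1 - alpha) * A + alpha * B with alpha = level / 256,
    which needs only multiplies and a shift (hypothetical helper).
    """
    return [
        [(a * (256 - level) + b * level) >> 8 for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def wipe(frame_a, frame_b, edge):
    """Left-to-right wipe: columns before `edge` come from B, the rest from A."""
    return [row_b[:edge] + row_a[edge:] for row_a, row_b in zip(frame_a, frame_b)]


a = [[0] * 4 for _ in range(2)]    # input A: black
b = [[200] * 4 for _ in range(2)]  # input B: light grey
print(fade(a, b, 128))  # [[100, 100, 100, 100], [100, 100, 100, 100]]
print(wipe(a, b, 2))    # [[200, 200, 0, 0], [200, 200, 0, 0]]
```

Animating a transition then means stepping `level` from 0 to 256, or `edge` from 0 to the frame width, over successive frames.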

Achieved Results

1) Used the CPU to manipulate the framebuffer to achieve crop/pad/scale.

2) Cropping: clipping 40 px off every side (top, bottom, left and right) worked fine, as shown below.

3) Code: https://github.com/timvideos/HDMI2USB-litex-firmware/pull/444

Working:

a) With the command `x c pattern output0`

b) With the command `x c input0 output0`

Video link: https://www.youtube.com/watch?v=p3Bl_UAnbkM
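The CPU-based approach amounts to rewriting the framebuffer in software. A minimal Python sketch of crop and pad on a single frame follows. It assumes a frame is a list of rows of pixel values and uses 0 as a hypothetical black fill value, whereas the real firmware is written in C and works on the framebuffer's own pixel format in memory.

```python
BLACK = 0  # hypothetical fill value; the real framebuffer pixel format differs


def crop(frame, top, bottom, left, right):
    """Return the inner window after removing the given margins (in pixels)."""
    height = len(frame)
    width = len(frame[0])
    return [row[left:width - right] for row in frame[top:height - bottom]]


def pad(frame, top, bottom, left, right, fill=BLACK):
    """Surround the frame with fill-valued margins (in pixels)."""
    width = len(frame[0]) + left + right
    padded = [[fill] * width for _ in range(top)]
    padded += [[fill] * left + list(row) + [fill] * right for row in frame]
    padded += [[fill] * width for _ in range(bottom)]
    return padded


frame = [[1] * 1280 for _ in range(720)]  # e.g. a 1280x720 frame
window = crop(frame, 40, 40, 40, 40)
print(len(window[0]), len(window))        # 1200 640
restored = pad(window, 40, 40, 40, 40)
print(len(restored[0]), len(restored))    # 1280 720
```

Cropping and then padding by the same margins keeps the output size and blanks the border. Note that every output pixel is rewritten on every frame, which is where the cost of a software approach comes from.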

4) The CPU approach failed because the CPU inside the SoC is not fast enough to manipulate every pixel of a full frame, so it slows the whole process down.

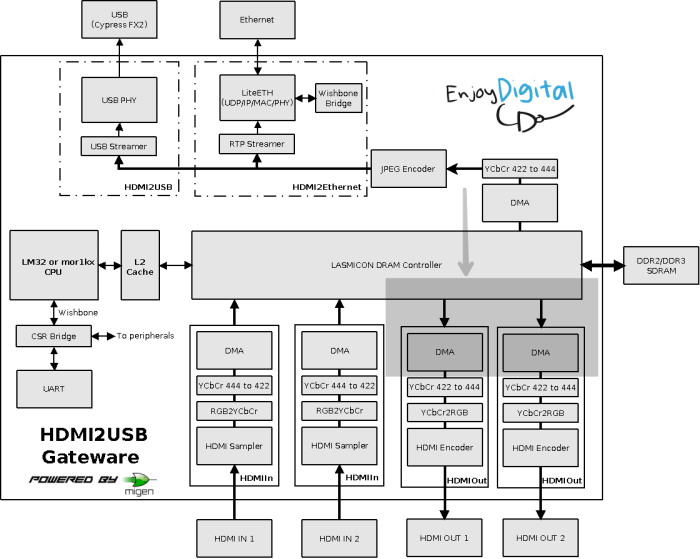
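A back-of-the-envelope calculation shows why. The sketch below computes how many CPU clock cycles are available per pixel if software has to touch every pixel of every frame. The 100 MHz clock and the 720p60 mode are illustrative assumptions, not measured figures for the HDMI2USB SoC.

```python
def cycles_per_pixel(cpu_hz, width, height, fps):
    """Clock cycles the CPU can spend on each pixel to keep up in real time."""
    pixels_per_second = width * height * fps
    return cpu_hz / pixels_per_second


# Assumed values: a 100 MHz CPU and a 1280x720 stream at 60 frames/s.
budget = cycles_per_pixel(100e6, 1280, 720, 60)
print(f"{budget:.2f} cycles per pixel")  # 1.81 cycles per pixel
```

Even a simple load, compare and store per pixel takes several instructions plus memory stalls, so under these assumptions the CPU cannot keep up, whereas gateware can process pixels as they stream past.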

5) To implement crop/pad/scale faster, I modified the HDL (especially the DMA section): https://github.com/enjoy-digital/litevideo/pull/20

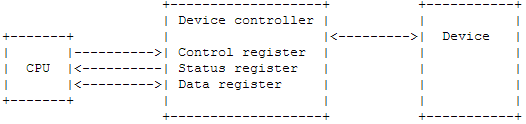

In the HDL code, the cropping module is implemented between the DMA reader and the VideoOut core. The VideoOut core generates a video stream from memory (via the DRAM controller).

This is highlighted in the architecture figure of the HDMI2USB gateware.
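In gateware the crop cannot rewrite memory after the fact; it has to act on pixels as they stream from the DMA reader to the VideoOut core. The Python generator below models that idea with a horizontal and a vertical counter, replacing pixels outside the window with a fill value so that exactly one pixel comes out per pixel in, as fixed video timing expects. It is a behavioural sketch under those assumptions, not a transcription of the litevideo pull request, and its names are hypothetical.

```python
def crop_stream(pixels, hres, vres, top, bottom, left, right, fill=0):
    """Gate a raster-order pixel stream against a crop window.

    Pixels inside the window pass through; the rest become `fill`.
    The output has the same length as the input, like a pipeline stage
    that cannot drop cycles.
    """
    x = 0  # horizontal counter (column)
    y = 0  # vertical counter (line)
    for pixel in pixels:
        inside = left <= x < hres - right and top <= y < vres - bottom
        yield pixel if inside else fill
        x += 1
        if x == hres:      # end of line: wrap x, advance y
            x = 0
            y += 1
            if y == vres:  # end of frame: restart at the top
                y = 0


out = list(crop_stream([1] * 12, hres=4, vres=3, top=1, bottom=1, left=1, right=1))
print(out)  # [0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0]
```

Because the margins are only inputs to a comparison, exposing them through CSR registers (the first of the next steps below) would make the crop adjustable at runtime without rebuilding the gateware.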

Next steps: I had planned to get the following merged into the codebase:

- Creating/Using CSR registers to make cropping dynamically configurable.

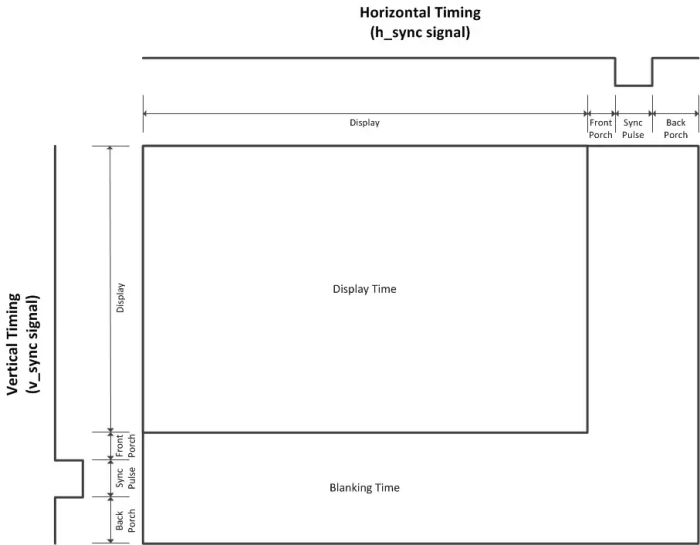

- For scaling: supporting crop windows whose horizontal (h) and vertical (v) resolutions are the full resolution divided by an integer, for example hres_crop = hres/N and vres_crop = vres/M. This way each pixel is copied N times horizontally, and vertically a line buffer is required that is reused M times.

However, due to time constraints this was not possible during GSoC. It is a work in progress, and I will continue working on it after GSoC.
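The integer scaling scheme from the scaling bullet can be modelled in a few lines of Python: each pixel of a cropped window is repeated N times horizontally, and each widened line is kept in a line buffer and emitted M times. This is a sketch of the idea, assuming frames are lists of rows; the function name is hypothetical.

```python
def upscale(window, n, m):
    """Scale a cropped window by integer factors n (horizontal) and m (vertical)."""
    out = []
    for line in window:
        # Pixel replication: every pixel is copied n times.
        line_buffer = [pixel for pixel in line for _ in range(n)]
        # Line buffer reuse: the same buffered line is output m times.
        for _ in range(m):
            out.append(list(line_buffer))
    return out


print(upscale([[1, 2], [3, 4]], 2, 2))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```

For example, with N = M = 2 a 640x360 window (hres/2 by vres/2 of a 1280x720 mode) fills the full 1280x720 output. Restricting N and M to integers keeps this scheme simple: no interpolation is needed, only per-axis repetition and one line of buffering.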

6) Documentation:

Corrected errors:

https://github.com/timvideos/HDMI2USB-litex-firmware/pull/443

https://github.com/timvideos/HDMI2USB-litex-firmware/pull/445

Additions to the documentation:

https://github.com/timvideos/HDMI2USB-litex-firmware/pull/446

7) HDMI2USB-mask-generation is implemented to generate wipes as explained in the hardware fader design doc. These will later be used to generate transition effects.
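One common way to drive wipes from masks is to give every pixel a mask value and switch from input A to input B wherever the mask falls below a moving threshold; different mask shapes then give different wipes. The Python sketch below shows that idea with a horizontal mask and a circular (iris) mask. It is an illustration under that assumption and is not necessarily how hdmi2usb-mask or the hardware fader design doc define their masks; all names are hypothetical.

```python
import math


def horizontal_mask(width, height, levels=256):
    """Mask grows from left to right; thresholding it gives a left-to-right wipe."""
    return [[x * levels // width for x in range(width)] for _ in range(height)]


def circle_mask(width, height, levels=256):
    """Mask grows with distance from the centre; thresholding it gives an iris wipe."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    max_d = math.hypot(cx, cy) or 1.0  # avoid division by zero for a 1x1 frame
    return [
        [int(math.hypot(x - cx, y - cy) / max_d * (levels - 1)) for x in range(width)]
        for y in range(height)
    ]


def apply_wipe(mask, frame_a, frame_b, level):
    """Take B where mask < level, else A. Sweeping level from 0 to levels animates the wipe."""
    return [
        [b if m < level else a for m, a, b in zip(mrow, arow, brow)]
        for mrow, arow, brow in zip(mask, frame_a, frame_b)
    ]


mask = horizontal_mask(4, 1)
print(mask)                         # [[0, 64, 128, 192]]
a, b = [[0] * 4], [[9] * 4]
print(apply_wipe(mask, a, b, 128))  # [[9, 9, 0, 0]]
```

Precomputing the mask once and comparing against a single threshold per frame is what makes this approach attractive for hardware: the per-pixel work is one comparison and one select.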

Major Tasks Left

1) Complete the HDL code that adds crop/scale in hardware.

2) Due to major changes in the codebase (“unforking” LiteX and Migen+MiSoC, as described in the email), ssk1328's work on the hardware fader design has to be reproduced/integrated into the current codebase.

Links to code

Main code:

https://github.com/Nancy-Chauhan/HDMI2USB-litex-firmware

https://github.com/Nancy-Chauhan/litevideo

Pull requests

https://github.com/timvideos/HDMI2USB-litex-firmware/pull/445

https://github.com/enjoy-digital/litevideo/pull/20

https://github.com/timvideos/HDMI2USB-litex-firmware/pull/444

https://github.com/Nancy-Chauhan/hdmi2usb-mask

Merged:

https://github.com/timvideos/HDMI2USB-litex-firmware/pull/446

https://github.com/timvideos/HDMI2USB-litex-firmware/pull/443


Learning

This project gave me the opportunity to learn a lot, more than I can write here. Summing up, the major things I learned include:

- Handling large codebases: this was the first time I worked with a large codebase.

- Asking questions openly and communicating with organisation members, since during this period I often had to ask different members for help.

- The importance of documentation, since during this period I relied on documentation of others' work.

- In the earlier stage of my project, I was using the CPU to manipulate pixels in the memory buffer. But I learned that the CPU inside the SoC is not fast enough to manipulate full frames; it can only be used for small things. Instead, the DMA HDL code, which takes the pixels from the HDMI input and writes them to memory, needed to be modified, because implementing the processing in HDL makes it faster.

- Learning Migen, which makes it possible to apply modern software concepts such as object-oriented programming and metaprogramming to hardware design. It is intuitive and provides a nice abstraction layer, so we can focus more on the logic.

- I had also planned to complete the hardware mixer block, but due to time constraints this was not possible. I will work on it after GSoC.

Conclusion

I would like to thank my mentor Kyle Robbertze and Tim ‘mithro’ Ansell for their support and help. Kyle was always there for me, no matter what the problem was. Thanks also to the organisation members CarlFK, Rohit Singh and Florent, who always responded to my queries about the project. It would not have been possible without them. I have learned a lot from this project, and I fully intend to complete the major tasks that remain.

Contact details

If you have any questions or suggestions, feel free to contact me anytime. Here are the details:

Email address: nancychn1@gmail.com

GitHub: Nancy-Chauhan

IRC nickname: nancy98